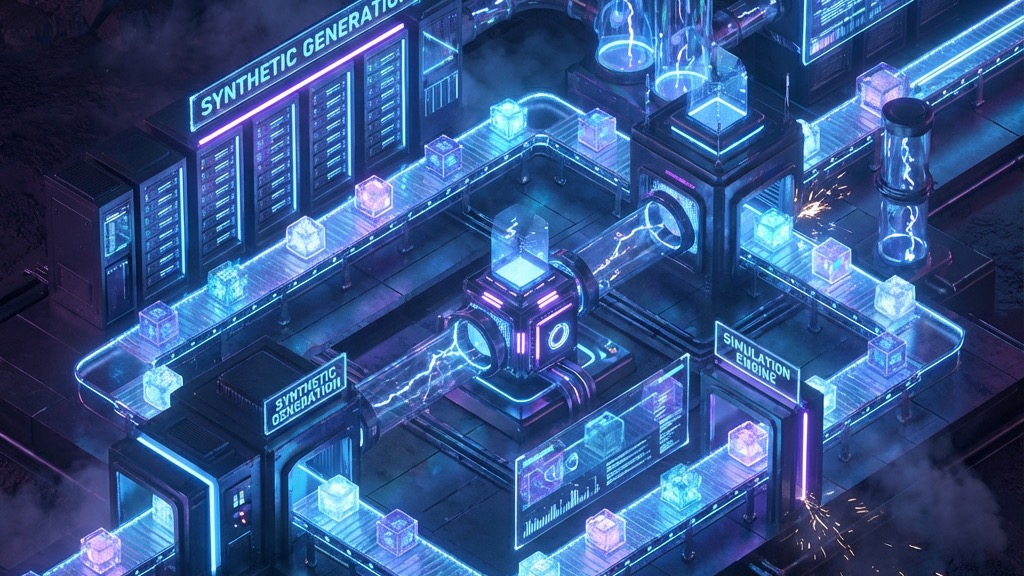

Data Factory: The Simulation Engine

Data Factory is a hyper-realistic synthetic data engine. It doesn't just generate random strings; it simulates business behaviors to stress-test modern data platforms.

The Problem

Building data platforms is hard. Testing them is harder.

- Production data is sensitive (PII).
- Staging data is stale.
- Edge cases (e.g. thermal limit failure) rarely happen naturally.

Data Factory solves this by allowing developers to script reality.

Capabilities

1. Behavior Packs

Data Factory uses a plugin system called "Packs" to define domain logic:

- Retail Pack: Simulates POS transactions, inventory drift, and customer churn.
- IoT Pack: Simulates sensor telemetry, heartbeat loss, and threshold alerts.
- Healthcare Pack: Generates HL7/FHIR-like claim streams with tunable error rates.
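To make the plugin idea concrete, here is a minimal sketch of how a pack could be registered and loaded. Everything in it is an assumption for illustration: `BehaviorPack`, `register_pack`, `load_pack`, `PACK_REGISTRY`, and the toy `IoTPack` fields are hypothetical names, not Data Factory's actual interface.

```python
import random
from abc import ABC, abstractmethod
from typing import Dict, Iterator, Type

# Hypothetical registry mapping a pack name to its class.
PACK_REGISTRY: Dict[str, Type["BehaviorPack"]] = {}


def register_pack(name: str):
    """Class decorator that records a pack under a lookup name."""
    def decorator(cls):
        PACK_REGISTRY[name] = cls
        return cls
    return decorator


class BehaviorPack(ABC):
    """Base class for domain logic; subclasses yield simulated events."""

    def __init__(self, seed: int = 0):
        # A seeded RNG keeps simulation runs reproducible.
        self.rng = random.Random(seed)

    @abstractmethod
    def events(self, count: int) -> Iterator[dict]:
        ...


@register_pack("iot")
class IoTPack(BehaviorPack):
    """Toy IoT pack: temperature telemetry with threshold alerts."""

    def __init__(self, seed: int = 0, threshold_c: float = 80.0):
        super().__init__(seed)
        self.threshold_c = threshold_c

    def events(self, count: int) -> Iterator[dict]:
        for i in range(count):
            temp = round(self.rng.gauss(70.0, 8.0), 2)
            yield {
                "device_id": f"sensor-{i % 3}",
                "temp_c": temp,
                "alert": temp > self.threshold_c,
            }


def load_pack(name: str, **kwargs) -> BehaviorPack:
    """Instantiate a registered pack by name."""
    return PACK_REGISTRY[name](**kwargs)
```

With this shape, `load_pack("iot", seed=42).events(5)` would yield five telemetry dictionaries, and adding a new domain would only require another decorated subclass.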

2. The "Live Ledger" Orchestrator

At its core is a serverless state machine (AWS Step Functions) that coordinates millions of events. It runs in three modes:

- Batch Mode: Dumps terabytes of history to S3 (Parquet/JSON).
- Stream Mode: Pushes thousands of events/sec to Kinesis/Kafka.
- Chaos Mode: Intentionally corrupts schema or drops data to test downstream dead-letter queues (DLQs).
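The Chaos Mode behavior can be sketched as a wrapper around any event stream. This is an illustration under stated assumptions, not the orchestrator's real code: the `chaos` function, its `drop_rate` and `corrupt_rate` parameters, and the two corruption strategies (removing a field, changing a value's type) are all hypothetical.

```python
import random
from typing import Iterable, Iterator


def chaos(events: Iterable[dict], drop_rate: float = 0.05,
          corrupt_rate: float = 0.05, seed: int = 0) -> Iterator[dict]:
    """Yield events, dropping some and breaking the schema of others."""
    rng = random.Random(seed)
    for event in events:
        roll = rng.random()
        if roll < drop_rate:
            continue  # simulate data loss: the event never arrives
        if roll < drop_rate + corrupt_rate and event:
            bad = dict(event)  # copy so the source event is not mutated
            key = rng.choice(sorted(bad))
            if rng.random() < 0.5:
                del bad[key]  # schema corruption: required field missing
            else:
                bad[key] = str(bad[key]) + "??"  # schema corruption: wrong type
            yield bad
        else:
            yield event
```

A downstream consumer that validates records against a schema should route the corrupted ones to its DLQ, and a count comparison between source and sink should expose the dropped ones.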

Architecture

The system is defined entirely as Infrastructure-as-Code (Terraform) and is deployed per tenant.

    flowchart TD
        UI[Console UI] --> API[API Gateway]
        API --> Lambda[Orchestrator Lambda]
        subgraph Engine [Step Function State Machine]
            Gen[Generator Kernels]
            Chaos[Chaos Monkey]
        end
        Lambda --> Engine
        Engine -->|Batch| S3[(S3 Buckets)]
        Engine -->|Stream| Kinesis[Kinesis Data Streams]
        S3 --> Telemetry[Telemetry API]
        Kinesis --> Telemetry
        Telemetry --> UI

Key Components

- Generator Kernels: Optimized Python modules that inflate "Seed" templates into millions of variant records.

- Lifecycle Manager: Handles the spin-up and tear-down of ephemeral test resources.

- Telemetry API: Provides real-time "Events Per Second" metrics back to the console.
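As a rough illustration of how a Generator Kernel might inflate a "Seed" template, the sketch below treats a seed as a dictionary whose values are either constants or callables that receive a seeded RNG and the record index. The template format, the `inflate` function, and the `pos_seed` fields are assumptions made for this example, not the project's actual kernel format.

```python
import random
from typing import Dict, Iterator

# Hypothetical seed format: field name -> constant, or callable(rng, index).
SeedTemplate = Dict[str, object]


def inflate(template: SeedTemplate, count: int, seed: int = 0) -> Iterator[dict]:
    """Lazily expand one seed template into `count` variant records."""
    rng = random.Random(seed)  # seeded so a run can be replayed exactly
    for i in range(count):
        yield {
            field: spec(rng, i) if callable(spec) else spec
            for field, spec in template.items()
        }


# Example seed for a retail point-of-sale record (illustrative fields only).
pos_seed = {
    "store": "store-001",
    "txn_id": lambda rng, i: f"txn-{i:08d}",
    "sku": lambda rng, i: rng.choice(["A100", "B200", "C300"]),
    "qty": lambda rng, i: rng.randint(1, 5),
}
```

Because `inflate` is a generator, `inflate(pos_seed, 5_000_000)` produces records one at a time rather than holding millions in memory, which suits both batch writes and streaming.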

Why it Matters

Data Factory lets FMAI (and other platforms) prove their worth. By generating "Bad Data" on demand, it makes it possible to verify that the governance layers actually work.